Project summary

Flooding is one of New Zealand’s most damaging hazards. This research programme supports the changes needed in how we manage flood risk. Its overall aim is to provide the first high-resolution flood hazard assessment for every catchment in the country. The research is producing New Zealand’s first consistent, publicly available national flood map, showing where flooding is likely to occur and how vulnerable our assets and taonga are.

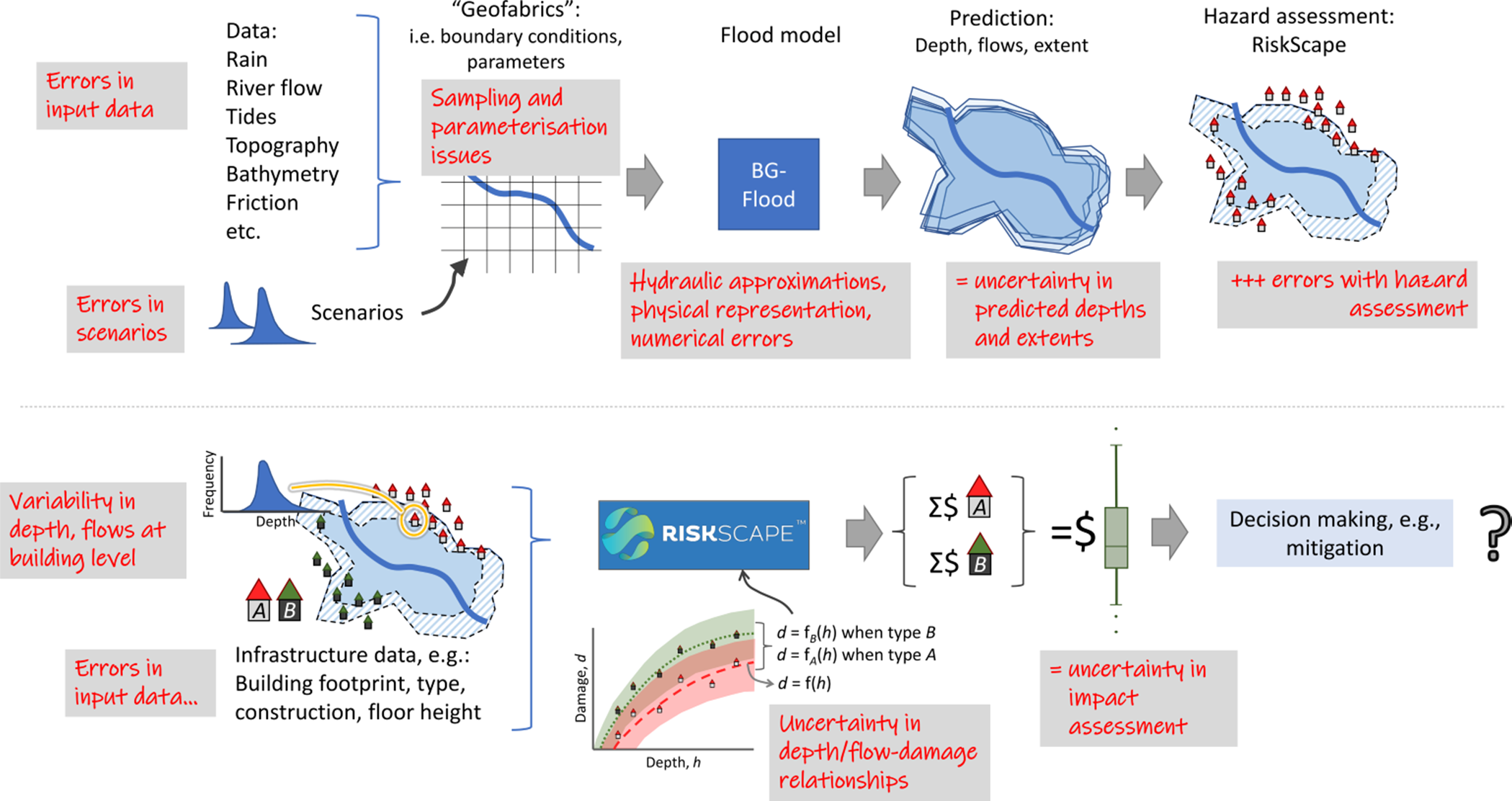

Prof. Matt Wilson is leading the cross-programme uncertainty theme for Mā te Haumaru ō te Wai. This research aims to advance our understanding and handling of the uncertainties present in predictions of flood inundation. Flood risk and other planning practitioners worldwide often use the outputs from flood modelling in their decision-making, such as when they determine flood hazard zones, design mitigation measures, or assess the potential impacts of climate change on the flood hazard. However, the uncertainty in these outputs is seldom quantified or characterised, which makes the decision-making process more challenging and less reliable.

To account for uncertainty, planners may take a precautionary approach, such as adding a freeboard amount to required floor levels in flood zones or designing flood infrastructure such as stopbanks (levees) to a 1% annual exceedance probability (i.e., the 100-year average recurrence interval). However, this approach is questionable in an era of changing risk under climate change. For example, is the freeboard amount used sufficient to prevent serious damage from future floods? Will the area at flood risk increase? Will a current 100-year flood become a 50-year flood in future?
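To make the design-standard arithmetic concrete, the sketch below computes the chance of at least one exceedance over a planning horizon from an annual exceedance probability (AEP), using 1 - (1 - AEP)^n. It assumes that years are independent and that the AEP stays constant, which is exactly the assumption climate change calls into question. The function name and the horizons are illustrative only.

```python
def prob_at_least_one_exceedance(aep: float, years: int) -> float:
    """Chance of one or more exceedances in `years`.

    Assumes independent years and a constant (stationary) AEP.
    """
    if not 0.0 <= aep <= 1.0:
        raise ValueError("aep must be a probability between 0 and 1")
    return 1.0 - (1.0 - aep) ** years


# Compare a 1% AEP (100-year ARI) with a 2% AEP (50-year ARI).
for aep in (0.01, 0.02):
    for horizon in (30, 50, 100):
        p = prob_at_least_one_exceedance(aep, horizon)
        print(f"AEP {aep:.0%} over {horizon} years: {p:.1%}")
```

Under these assumptions, a 1% AEP event has roughly a 26% chance of occurring at least once in 30 years. If the same event shifted to a 2% AEP, that chance would rise substantially, which is why a fixed design standard may not hold its intended level of protection.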

Some of these uncertainties are aleatoric in nature: they will always be present and cannot be reduced. They include the internal variability of the climate system: even if we had complete information about the future climate state, its chaotic nature means our flood risk assessments would still be uncertain. Other uncertainties are epistemic: they are deterministic and subjective, arising from limited knowledge, so the uncertainty contained in a flood risk assessment depends on how good (or bad!) the data used in the analysis are. Improving input data accuracy and model representations should, at least theoretically, reduce the uncertainty in the predictions obtained and is something we always aim for.

Yet, even if we use the best possible data and model representations, uncertainty will still result from a complex combination of errors associated with source data, sampling and model representation. These uncertainties “cascade” through the risk assessment system (see right), reducing our confidence in any individual prediction and leading to variability in predicted depths and extents across multiple predictions which account for these errors (e.g., within Monte Carlo analysis). Represented here as variability in predicted depths and flows, they cascade further through to the analysis of flood impacts.
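The idea of a Monte Carlo analysis can be sketched with a toy example. The code below is not the programme's hydrodynamic model: it swaps in Manning's equation for a wide channel as a stand-in, and the error distributions (flow, roughness, ground elevation) are assumed values chosen purely for illustration.

```python
import math
import random
import statistics


def normal_depth(unit_flow: float, manning_n: float, slope: float) -> float:
    """Depth (m) in a wide channel from Manning's equation:
    q = (1/n) * h**(5/3) * sqrt(S), rearranged for h."""
    return (manning_n * unit_flow / math.sqrt(slope)) ** 0.6


def monte_carlo_depths(n_runs: int = 5000, seed: int = 42) -> list:
    """Sample uncertain inputs and return one predicted depth per run."""
    rng = random.Random(seed)
    depths = []
    for _ in range(n_runs):
        # Assumed error models (hypothetical, not from the programme).
        unit_flow = rng.lognormvariate(math.log(2.0), 0.25)  # m^2/s
        manning_n = rng.uniform(0.03, 0.05)                  # roughness
        ground_error = rng.gauss(0.0, 0.15)                  # m, elevation error
        depth = normal_depth(unit_flow, manning_n, slope=0.001) - ground_error
        depths.append(max(depth, 0.0))
    return depths


depths = monte_carlo_depths()
cuts = statistics.quantiles(depths, n=20)  # 19 cut points: 5%, 10%, ..., 95%
print(f"median depth: {statistics.median(depths):.2f} m")
print(f"5th to 95th percentile: {cuts[0]:.2f} to {cuts[-1]:.2f} m")
```

Reporting a percentile range rather than a single depth is one simple way to carry the variability forward instead of discarding it.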

Uncertainty in predicted depths and flows combines with errors in data such as those for buildings and infrastructure, and in the statistical models used to quantify damage (e.g., via depth-damage curves). The end result is uncertainty in quantified damage for a flood scenario, creating issues for decision-making processes such as determining whether to invest in improved mitigation measures.
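As a rough illustration of how these errors combine, the sketch below pushes uncertain water depths through a depth-damage curve for a single building. Everything numeric here is hypothetical: the curve points, the floor height and replacement value errors, and the scaling factor that stands in for damage-model error are assumptions, not values from the programme.

```python
import random
import statistics

# Hypothetical depth-damage curve: (depth above floor in m, damage fraction).
CURVE = [(0.0, 0.0), (0.5, 0.25), (1.0, 0.45), (2.0, 0.70), (3.0, 0.85)]


def damage_fraction(depth: float, scale: float = 1.0) -> float:
    """Piecewise-linear interpolation on CURVE, scaled and clamped to [0, 1]."""
    if depth <= CURVE[0][0]:
        return 0.0
    base = CURVE[-1][1]  # beyond the last point, hold the final value
    for (d0, f0), (d1, f1) in zip(CURVE, CURVE[1:]):
        if d0 <= depth <= d1:
            base = f0 + (f1 - f0) * (depth - d0) / (d1 - d0)
            break
    return min(max(base * scale, 0.0), 1.0)


def simulate_losses(n_runs: int = 5000, seed: int = 7) -> list:
    """Combine hazard, building-data and damage-model errors per run."""
    rng = random.Random(seed)
    losses = []
    for _ in range(n_runs):
        water_depth = rng.gauss(1.2, 0.3)    # m, hazard uncertainty (assumed)
        floor_height = rng.gauss(0.3, 0.1)   # m, building data error (assumed)
        value = rng.gauss(500_000, 50_000)   # replacement value (assumed)
        curve_scale = rng.gauss(1.0, 0.15)   # damage-model error (assumed)
        fraction = damage_fraction(water_depth - floor_height, curve_scale)
        losses.append(fraction * max(value, 0.0))
    return losses


losses = simulate_losses()
print(f"mean loss: {statistics.mean(losses):,.0f}")
print(f"standard deviation: {statistics.stdev(losses):,.0f}")
```

The spread in the simulated losses reflects all three error sources at once, which is the kind of combined uncertainty a decision-maker would face when weighing an investment in mitigation.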

Project Lead:

Dr. Emily Lane (NIWA).

GRI Team:

Matthew Wilson (uncertainty lead), Dr. Rose Pearson (NIWA/GRI visiting research fellow), Martin Nguyen, Andrea Pozo Estivariz

Funding

MBIE Endeavour Research Programme